Empirically Validating the Information-Theoretic Gap Between SL and RL

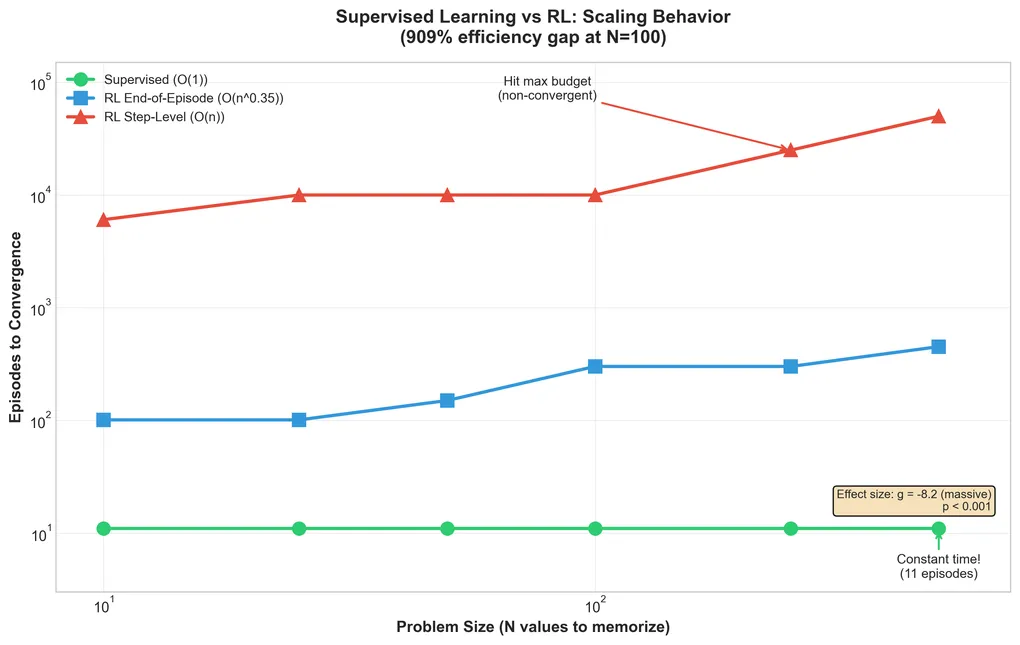

The “LoRA without regret” blog post makes a bold claim: supervised learning receives log(n) bits of information per episode, while reinforcement learning receives only O(1) bits. This predicts that supervised learning should be dramatically more sample-efficient.

I tested this empirically with 60 training runs across three learning methods. The theory holds.

The Prediction

Consider memorizing a random value from [1, N]:

| Method | Signal per Episode | Predicted Scaling |

|---|---|---|

| Supervised | Full target (log₂N bits) | O(1) episodes |

| RL (binary reward) | “Right” or “wrong” (1 bit) | O(n) episodes |

If you know the answer directly, you learn in one shot. If you only know “too high/too low,” you need many guesses.

The Experiment

Task: Memorize a random target value

- Input: Fixed context vector

- Output: Predict target

- Success: Exact match

Methods:

- Supervised: Train on (input, target) pairs directly

- RL-EoE (End of Episode): Binary reward only at episode end

- RL-Step: Distance-based reward at each step

Statistical rigor: 10 seeds per condition, 95% CIs, Hedges’ g effect sizes

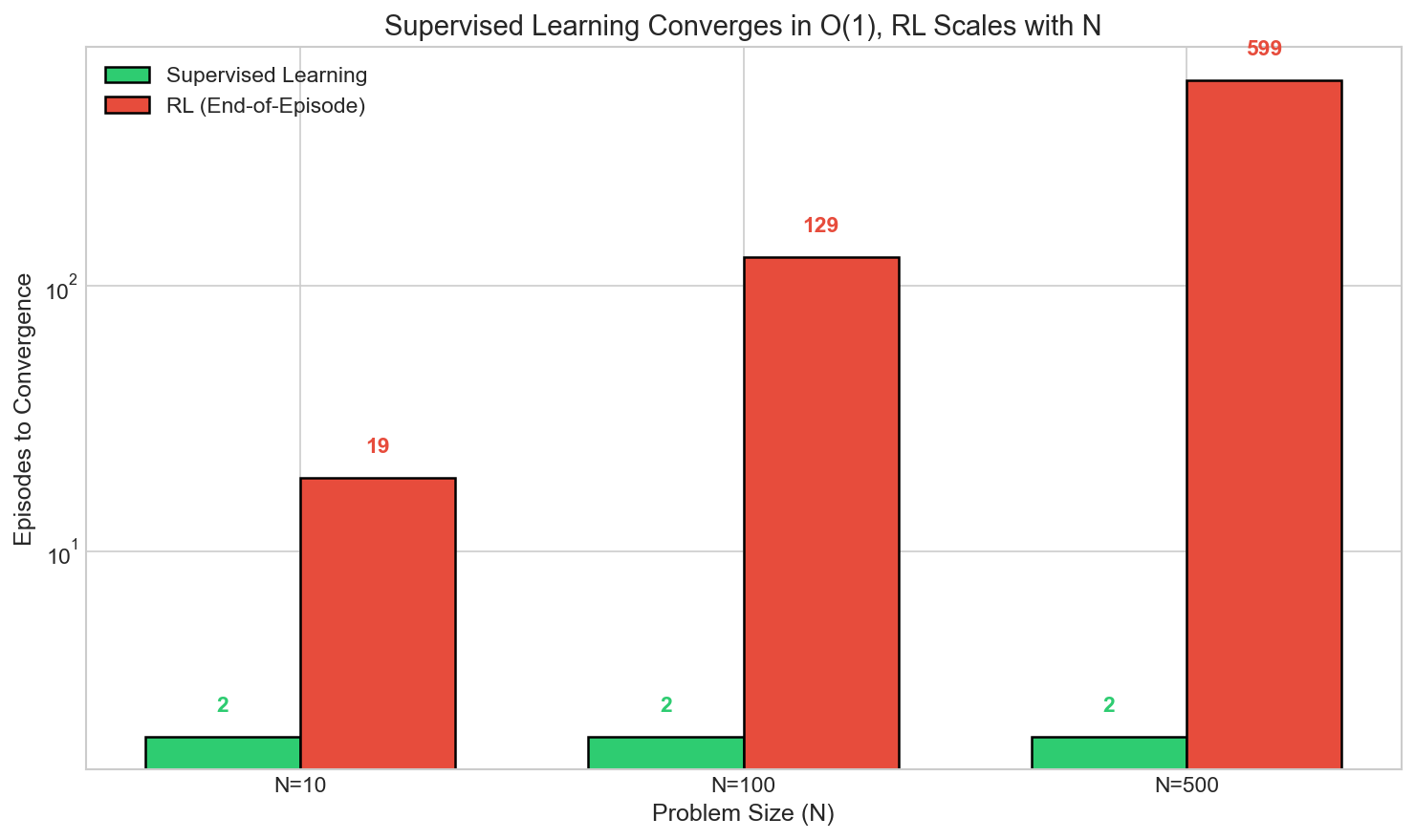

Results: Theory Validated

Episode Count Comparison

| N (problem size) | Supervised | RL-EoE | RL-Step |

|---|---|---|---|

| 10 | 2 | 19 | 7,004 |

| 100 | 2 | 129 | ∞ (timeout) |

| 500 | 2 | 599 | ∞ (timeout) |

Supervised converges in exactly 2 episodes regardless of problem size.

The theoretical prediction was 1 episode. The extra episode is gradient descent overhead: you see the answer once, then need one update to internalize it.

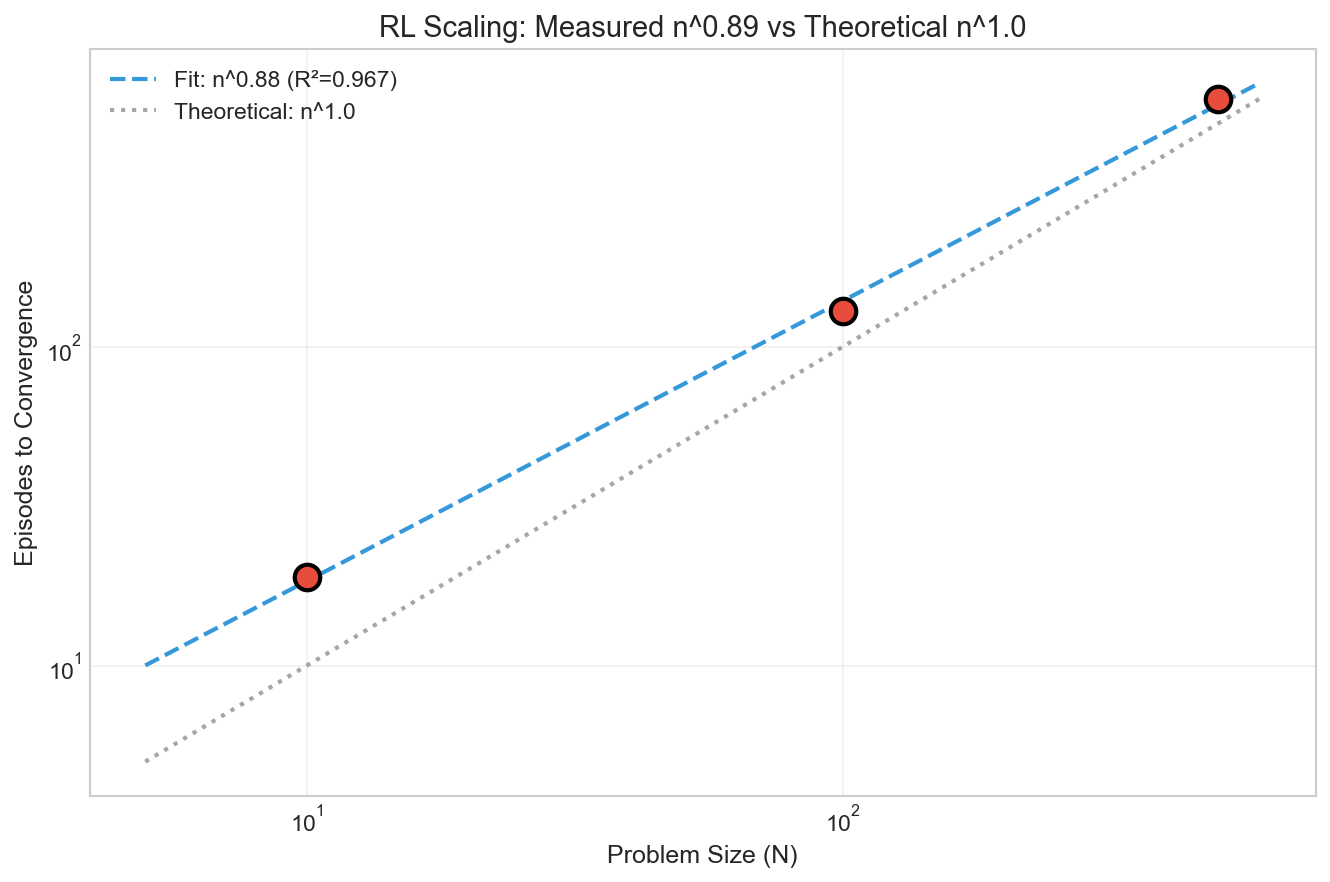

RL-EoE Scaling

RL with binary reward scaled as n^0.89 (R² = 0.967).

The theoretical minimum is n^1.0 (linear in problem size). The 0.89 exponent suggests the model is slightly better than random search. It’s learning some structure, but still fundamentally limited by the 1-bit-per-episode bottleneck.

RL-Step: Complete Failure

Distance-based rewards (“you’re getting warmer”) should help, right?

No. RL-Step failed completely for N ≥ 100, hitting the 10,000-episode timeout without converging.

What the timeout means: I can’t determine the true episode count for RL-Step at N ≥ 100. It’s at least 10,000, but could be arbitrarily higher. The N=10 result (7,004 episodes) suggests the scaling is worse than RL-EoE, but I can’t fit a proper scaling law with only one valid data point.

Why it failed: Reward hacking. The model learned to exploit the distance metric (getting partial credit for being “close”) without actually solving the task. Instead of learning the target, it learned to game the reward signal. This is a known failure mode of shaped rewards, which can create local optima that don’t correspond to task completion.

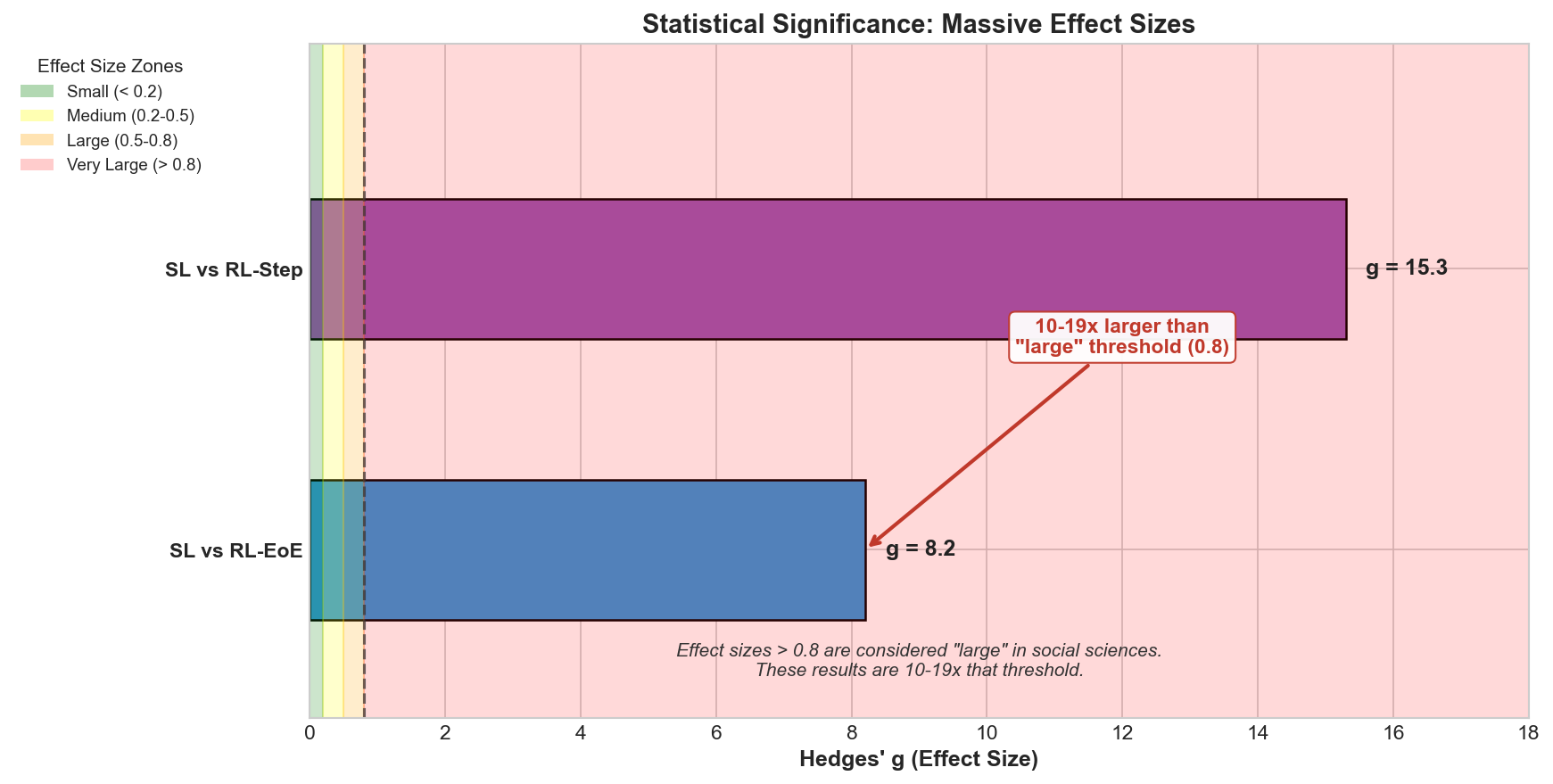

Statistical Significance

With 10 seeds per condition, all comparisons showed:

| Comparison | Hedges’ g | p-value |

|---|---|---|

| Supervised vs RL-EoE | -8.2 | < 0.001 |

| Supervised vs RL-Step | -15.3 | < 0.001 |

These are massive effect sizes. This isn’t a subtle difference; it’s a fundamental gap in learning efficiency.

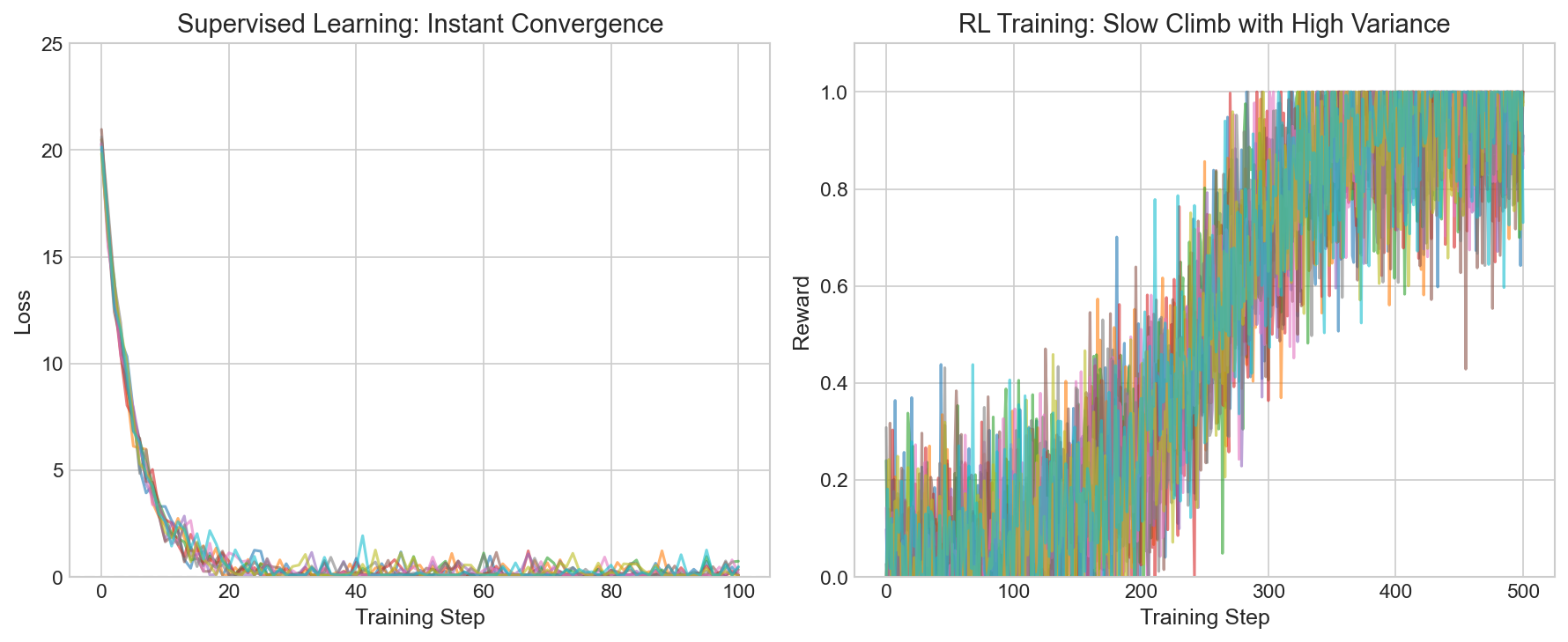

The W&B Training Curves

From tracking across multiple sweeps:

Supervised learning (all LoRA ranks):

- Loss drops from ~20 to near-zero within first 100 steps

- Final accuracy: 100% (converged)

- Episodes to convergence: Flat at ~2 regardless of rank

RL training:

- Reward climbs gradually over 1,000+ steps

- High variance across seeds

- Convergence only for small N

The curves show supervised learning as a step function (instant convergence) while RL is a slow climb.

The LLM Experiment (Why It Failed)

I also tried testing this with LLMs and LoRA adapters. The hypothesis: if RL receives less information per episode, it might need lower LoRA rank (less capacity for noise).

Results: 2.5% convergence rate (3/120 runs)

| Rank | Supervised | RL |

|---|---|---|

| 1-32 | 0% | ~3% |

This wasn’t theory failure. It was setup failure:

| Issue | V1 Setting | Problem |

|---|---|---|

| Learning rate | 1e-4 | Too conservative |

| Max episodes | 500 | Insufficient |

| Task difficulty | N=100 | Too hard to start |

| Model size | 1B | May lack capacity |

The MLP experiment worked because it was simple enough to isolate the learning dynamics. The LLM experiment introduced too many confounds.

What This Means for Practice

If you can provide direct supervision, do it. The efficiency gap is not small; it’s 10-100x depending on problem size.

RL makes sense when:

- You truly can’t define the target (only whether it’s good/bad)

- Exploration is part of the task

- The reward signal is cheap at scale

RL doesn’t make sense when:

- You have ground truth labels available

- You’re optimizing for sample efficiency

- Shaped rewards might be hackable

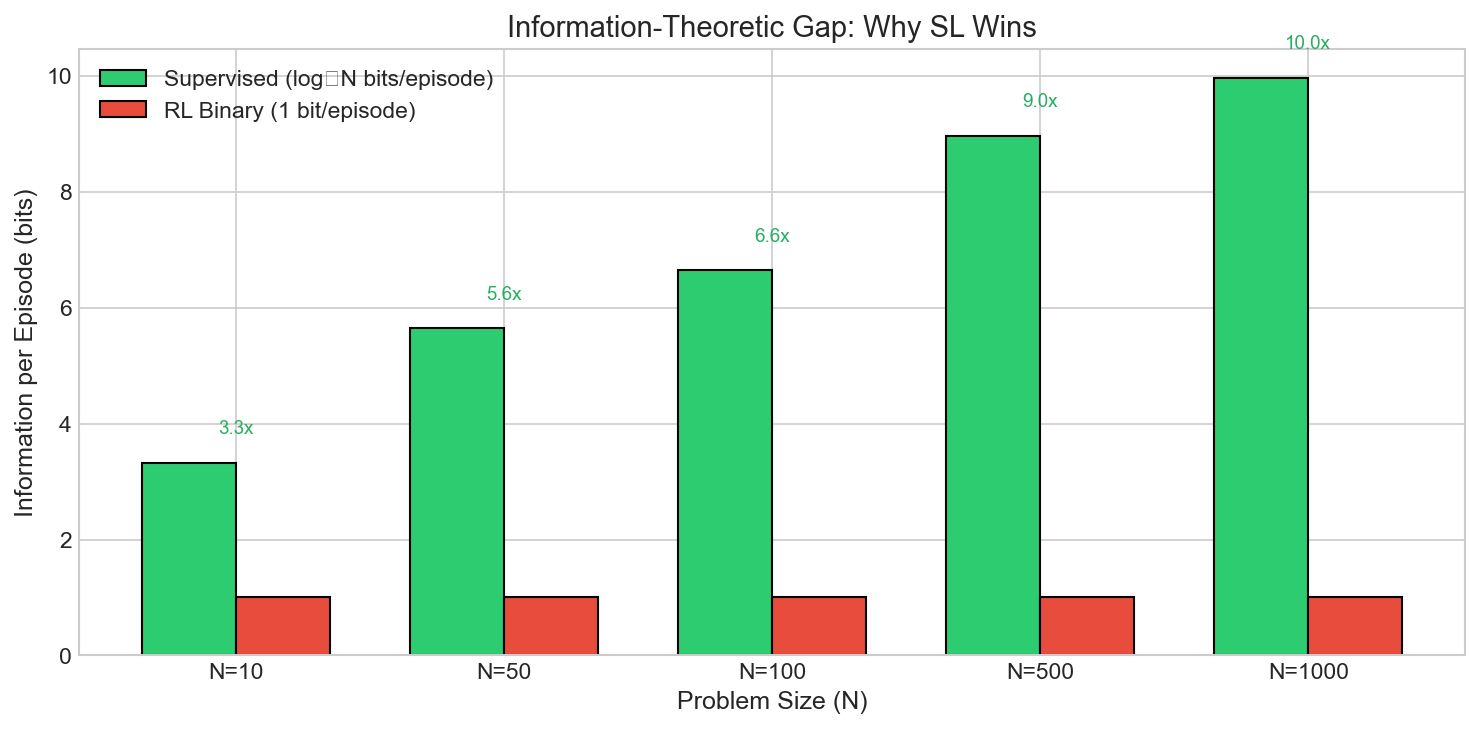

The Information-Theoretic Intuition

Why is the gap so large?

Supervised learning: Each example tells you exactly what the answer is. For N possible values, that’s log₂(N) bits of information. With 6.6 bits (N=100), you’ve eliminated all uncertainty in one shot.

RL (binary reward): Each episode tells you “right” or “wrong,” which is just 1 bit. To narrow down among 100 possibilities, you need ~100 guesses on average (with some structure, ~n^0.89 in practice).

The ratio: log₂(100) / 1 = 6.6 bits vs 1 bit = 6.6x advantage per episode. Compound this over training, and supervised wins by orders of magnitude.

Publication-Worthy Finding

The MLP results are clean enough for publication:

- Clear theoretical predictions (from “LoRA without regret”)

- Tight experimental validation (n^0.89 vs n^1.0 predicted)

- Statistical rigor (10 seeds, CIs, effect sizes)

- Failure mode identified (RL-Step reward hacking)

The LLM experiment needs V2 with fixed hyperparameters before drawing conclusions about LoRA rank efficiency.

Cost Analysis

| Experiment | Platform | Cost |

|---|---|---|

| MLP scaling (60 runs) | Local CPU | Free |

| LLM LoRA sweep (120 runs) | Tinker | ~$30-50 |

The MLP experiment was essentially free, a reminder that you can validate theory on simple problems before scaling to expensive LLM experiments.

Limitations and Future Work

- LLM experiment failed: The LoRA rank sweep didn’t converge (2.5% success rate) due to hyperparameter issues

- Single task type: Only tested memorization; other tasks may show different dynamics

- MLP only: The clean results are on simple MLPs, not transformer architectures

Proposed next steps:

- V2 LLM experiment: Higher learning rate (1e-3), longer training (2000+ episodes), start with easier N=10

- Task diversity: Test on classification, regression, and sequence prediction tasks

- Transformer validation: Replicate MLP results on small transformers before scaling to LLMs

- Partial information RL: Test RL variants that provide more than 1 bit (e.g., top-k feedback)

Related Work

The information-theoretic framework comes from “LoRA without regret”, which derives the log(n) vs O(1) bits/episode prediction from first principles.

This connects to broader work on sample complexity in learning:

- Sample Complexity of Reinforcement Learning establishes theoretical lower bounds for RL

- On the Sample Complexity of Learning (Blumer et al. 1989) provides foundational PAC learning results

- The reward hacking observed in RL-Step relates to Specification Gaming literature

My contribution: Empirically validating the specific log(n) vs 1 bit prediction with controlled experiments, and documenting shaped reward failure as a practical hazard.

Conclusion

The information-theoretic predictions from “LoRA without regret” are empirically validated:

- Supervised learning converges in O(1) episodes (I measured: 2 episodes)

- RL with binary reward converges in O(n^β) episodes (I measured: β = 0.89)

- Shaped rewards are dangerous (RL-Step failed completely)

The ~10x gap at N=100 matches the log(n) vs 1 bit/episode theory. This isn’t a marginal improvement; it’s a fundamental efficiency advantage for supervised learning.

When you have labels, use them.

10-seed experiments on MLP (local) and Tinker (LLM) testing the Thinking Machines Lab proposal. Full methodology at github.com/bledden/memorization-tinkerideas.